I am currently implementing some classes for the new “TigerHeart” graphics engine using the OpenGL pipeline. Starting with OpenGL is the right approach, because I am a beginner with it and it differs from Direct3D more than I expected. Since I already have professional experience with Direct3D, I can judge how to design the interfaces, classes, and their interactions so that they work with both APIs.

A good example is shader programming: in Direct3D, vertex and pixel shaders can be set largely independently of one another. In OpenGL, however, you have to create a program object to which multiple shaders are attached. This “program” must then be linked and activated before the shaders take effect.
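The create, attach, link sequence can be sketched roughly as follows. This is only an illustration, not the engine's actual code: `GlFunctions`, `compileShader`, and `buildProgram` are hypothetical names, and the entry points are passed in as function pointers so the sketch compiles without OpenGL headers or a context. Each pointer is assumed to carry the signature of the real OpenGL function named in its comment (real headers additionally apply a calling-convention macro).

```cpp
// Minimal stand-ins for the OpenGL typedefs (assumption: matches common platforms).
using GLenum = unsigned int;
using GLuint = unsigned int;
using GLint = int;
using GLsizei = int;
using GLchar = char;

constexpr GLenum kFragmentShader = 0x8B30;  // value of GL_FRAGMENT_SHADER
constexpr GLenum kVertexShader = 0x8B31;    // value of GL_VERTEX_SHADER

// Hypothetical table of entry points, to be filled by the engine's GL loader.
struct GlFunctions {
    GLuint (*CreateShader)(GLenum type);                  // glCreateShader
    void (*ShaderSource)(GLuint shader, GLsizei count,
                         const GLchar* const* strings,
                         const GLint* lengths);           // glShaderSource
    void (*CompileShader)(GLuint shader);                 // glCompileShader
    GLuint (*CreateProgram)();                            // glCreateProgram
    void (*AttachShader)(GLuint program, GLuint shader);  // glAttachShader
    void (*LinkProgram)(GLuint program);                  // glLinkProgram
    void (*UseProgram)(GLuint program);                   // glUseProgram
};

// Creates one shader object and compiles it from a NUL-terminated source string.
// Real code should also query the compile status; that is omitted here.
GLuint compileShader(const GlFunctions& gl, GLenum type, const GLchar* source) {
    const GLuint shader = gl.CreateShader(type);
    gl.ShaderSource(shader, 1, &source, nullptr);  // nullptr: length taken from NUL
    gl.CompileShader(shader);
    return shader;
}

// Attaches both shaders to a single program object and links it.
// Real code should also query the link status; that is omitted here.
GLuint buildProgram(const GlFunctions& gl, const GLchar* vertexSource,
                    const GLchar* fragmentSource) {
    const GLuint vertexShader = compileShader(gl, kVertexShader, vertexSource);
    const GLuint fragmentShader = compileShader(gl, kFragmentShader, fragmentSource);
    const GLuint program = gl.CreateProgram();
    gl.AttachShader(program, vertexShader);
    gl.AttachShader(program, fragmentShader);
    gl.LinkProgram(program);
    return program;
}
```

Before drawing, the caller would activate the result with `gl.UseProgram(program)`. Note that the pixel shader is called a fragment shader in OpenGL.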

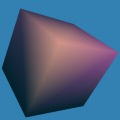

The two images here show my current progress. The model is a cube with shared, smoothed vertex normals, which is not a sensible choice for a cube but makes a good test. The first image shows conventional per-vertex diffuse and specular lighting. The quality is poor because both lighting colors are computed only at the eight vertices and then linearly interpolated across each face.

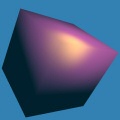

The second image shows the same calculations moved to the pixel shader. A normal map is not needed unless you want to add surface detail without adding vertices. The quality seems to be nearly perfect.
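To illustrate why the two approaches look so different, here is a small CPU-side sketch that compares them on one face of such a cube. It is not shader code and not taken from the engine: the Blinn-Phong model, the single directional light, and all names (`shade`, `perVertex`, `perPixel`, and so on) are assumptions made for the example.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>

struct Vec3 { double x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 v, double s) { return {v.x * s, v.y * s, v.z * s}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 v) { return v * (1.0 / std::sqrt(dot(v, v))); }

struct Lighting { double diffuse; double specular; };

// Blinn-Phong terms for one directional light (an assumed lighting model).
// toLight and toViewer are expected to be unit vectors.
Lighting shade(Vec3 normal, Vec3 toLight, Vec3 toViewer, double shininess) {
    const Vec3 n = normalize(normal);
    const Vec3 h = normalize(toLight + toViewer);
    return {std::max(dot(n, toLight), 0.0),
            std::pow(std::max(dot(n, h), 0.0), shininess)};
}

// Smoothed normals at the four corners of the cube's +Z face. They point
// diagonally outward because each vertex is shared by three faces.
std::array<Vec3, 4> cornerNormals() {
    return {{normalize({-1.0, -1.0, 1.0}), normalize({1.0, -1.0, 1.0}),
             normalize({-1.0, 1.0, 1.0}), normalize({1.0, 1.0, 1.0})}};
}

// Bilinear weights of the four corners for a point (u, v) in [0, 1] x [0, 1].
std::array<double, 4> bilinearWeights(double u, double v) {
    return {{(1 - u) * (1 - v), u * (1 - v), (1 - u) * v, u * v}};
}

// Per-vertex lighting: shade at the corners, then interpolate the results.
Lighting perVertex(double u, double v, Vec3 toLight, Vec3 toViewer,
                   double shininess) {
    const auto normals = cornerNormals();
    const auto weights = bilinearWeights(u, v);
    Lighting result{0.0, 0.0};
    for (std::size_t i = 0; i < normals.size(); ++i) {
        const Lighting corner = shade(normals[i], toLight, toViewer, shininess);
        result.diffuse += weights[i] * corner.diffuse;
        result.specular += weights[i] * corner.specular;
    }
    return result;
}

// Per-pixel lighting: interpolate the normal, then shade at the pixel.
Lighting perPixel(double u, double v, Vec3 toLight, Vec3 toViewer,
                  double shininess) {
    const auto normals = cornerNormals();
    const auto weights = bilinearWeights(u, v);
    Vec3 normal{0.0, 0.0, 0.0};
    for (std::size_t i = 0; i < normals.size(); ++i) {
        normal = normal + normals[i] * weights[i];
    }
    return shade(normal, toLight, toViewer, shininess);
}
```

At the face center (`u = v = 0.5`), with the light and the viewer both along +Z and a shininess of 32, `perVertex` yields a diffuse term of about 0.58 and practically no specular highlight, while `perPixel` yields 1.0 for both. The highlight in the middle of the face simply cannot appear if lighting is evaluated only at the corners.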

The mesh is rendered using vertex buffer objects (VBOs), which are the fastest way to draw complex models in OpenGL, and the shaders are written in GLSL. This language is comparable to Microsoft’s HLSL but has some design differences. For example, the compiler is integrated into the graphics driver, and there is no way to specify a shader model target.